CASE STUDY

Virtual Student Achievement using Metrics

Online education uses a myriad of software applications to educate remote students in virtual classrooms. These systems provide an array of data about these students. Mining and using this data in different ways can improve the academic achievement of the students. However, this data is often difficult to access and view at a glance, which is exactly where metrics and analytics would be of most value to teachers in real time.

This case study looks at the capabilities of Student Achievement Systems’ Pulse, a cloud-based software tool that integrates with those systems, such as Learning Management Systems (LMS) and Student Information Systems (SIS), to deliver that real-time, at-a-glance view of student metrics and analytics. Specifically, we’ll look at how Pulse is used by the Berrien Springs Public School District in southwest Michigan, and how it helped raise academic achievement, measured by student grades, retention, graduation rates, and other criteria.

The Challenge

Software systems used in the traditional learning experience are each designed for a specific task. A Learning Management System (LMS) delivers the course information and a curriculum to the students, and provides teachers with feedback on the student. A Student Information System (SIS) manages student records such as names, demographics, grades, and attendance. Other software such as Excel, Word, Outlook, Evernote, Facebook, and Google Docs provides ancillary solutions to these processes.

The combined and coordinated use of these different systems works well enough in a real-time, in-person physical classroom. Teachers are able to monitor their students’ individual well-being in large part by seeing them in class every day, interacting with them, and observing their behavior. The major challenge faced by online schools, virtual classrooms, and remote learning was articulated by Berrien Springs’ Director of Quality Assurance: “Virtual Learning has one major flaw: relational distance. Somehow, administrators need to be able to verify that staff and students are continually connecting so that critical relational quality between them is maintained.”

The Solution

With the Director of QA’s assessment as a major driver in the search for a solution to the student achievement monitoring problem, Berrien Springs began using Pulse. The central feature of Pulse is a clear, at-a-glance dashboard that provides powerful student data for an entire class, pulling data directly from one or more Learning Management Systems. Row by row, instructors can quickly scan across student data as it happens. With this accurate data stream from Pulse, there’s no sifting through records hoping to figure out when the last touch-point occurred. Instead, data points that show student progress, schedules, and communications are delivered up to the moment, allowing teachers to make on-target decisions to best help students.

In addition to the Dashboard, Pulse has tools to aid in Learner Management, including a Teacher Log, Student Information, the Student’s Plan, Transcript, Support & Intervention, Messaging & Chat, and Reporting.

The Results

The Quality Assurance (QA) Team at Berrien Springs Public Schools evaluates student metrics and recommends individual and holistic improvements throughout the student’s organizational life cycle: pre-enrollment, enrollment, learning environment, graduation, and post-enrollment. The goal is to identify needs, design studies, implement pilots, collect data, report results, and interpret results to drive change.

The QA Team began by identifying four points of data to get a full understanding of the educational organization prior to putting school improvement strategies into place. Via integration with Berrien Springs’ LMS and SIS, Pulse provided all of this information for the virtual education setting.

- Demographic Data

  - This includes gender, students with disabilities, English Language Learners, Free and Reduced Lunch, ethnicity, community characteristics, etc.

  - Pulse can separate data into subgroups to show gaps, strengths, and growth edges.

  - Who is in our subgroups and how have they shifted over time?

  - What do our subgroups value and need to be successful?

- Perception Data/Surveys

  - Quality of service and meeting the needs of students, parents, and staff.

  - Are the results of our surveys and questions reality or perception?

  - Survey data collection drives change and organizational improvement.

- Achievement Data

  - Pulse metrics report the success rates and point to areas needing attention:

    - Attendance / Logged-in rate

    - Student communications

    - Communication rate, quality, effectiveness

    - Student time on task

    - Course progress, grades, and completion rates

    - Trend and goal comparison data

- Process Data

  - Analysis of current processes based on a data feedback loop. What processes should be created or changed in order to improve the metrics?

  - Analyzing implementation, systems, training, etc.


Example

The QA team knew that communication had a direct impact on student achievement, particularly communication to students about their coursework and about their individual well-being. When looking at the dashboard of data compiled by Pulse, the team identified that some instructors were communicating at a highly effective level and others were performing less effectively. An analysis of over 8,000 staff and student logs was performed to determine why some staff were highly successful with student engagement, and why other staff were less successful.

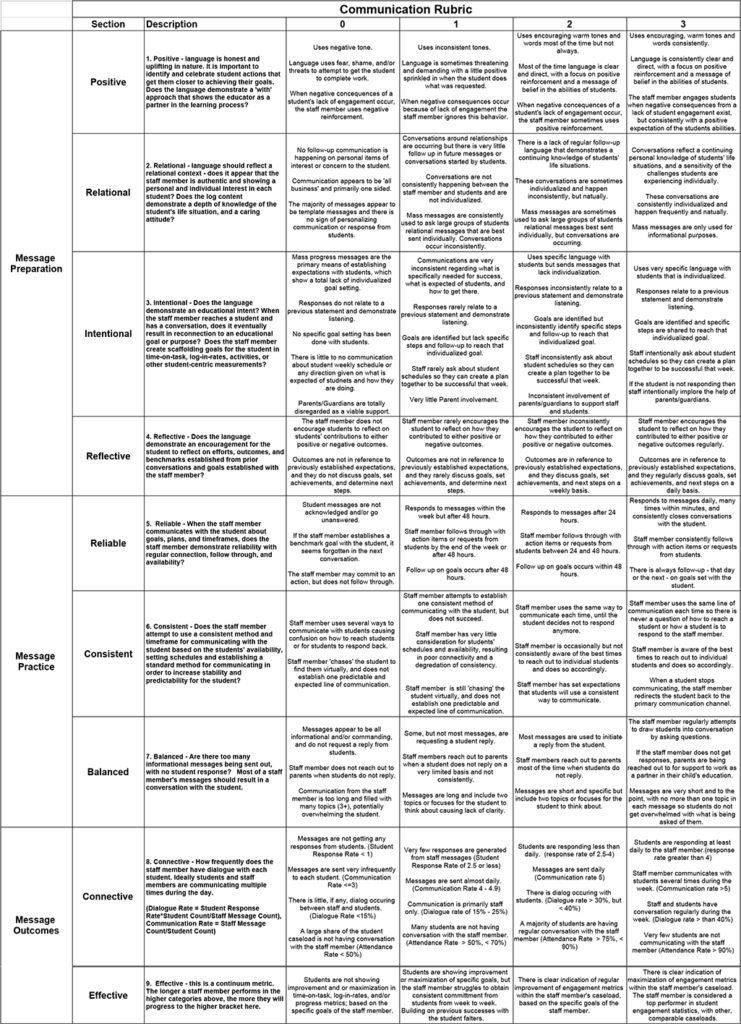

The QA Team set out to identify the communication behaviors that were present in highly effective staff but not in less effective staff, and to use them to create a self-evaluation rubric for improving communication skills.

A review of the metrics available in Pulse provided a top-down view of how the school was doing by organization, region, building, and staff member. The data revealed that there were top-performing and bottom-performing staff. This discovery prompted a deeper dive into the behavioral attributes of these staff members’ student communications, to see if there was a difference.

The team’s hypothesis was that communication is key to establishing relationships in a virtual learning environment, and that student behavior will make or break success in virtual learning. The team also believed that virtual learning success can be increased by behavioral conditioning, and that low achievement is typically due to a lack of engagement and/or motivation, both of which can be addressed.

Furthermore, teachers and mentors have a significant influence on these success factors. Staff habits and processes make a difference in creating and molding relationships with students, and results correlate directly with whether teachers have proactive habits and processes or are more passive and disengaged. On top of that, in a virtual learning environment, all of these engagement routines (or the lack of them) must be performed without an in-person, captive classroom audience.

These data characteristics made up our tiered metrics system, which corresponds to Michigan’s Multi-Tiered System of Supports (MTSS) framework.

If students fell in a certain tier, they were displaying specific characteristics or student behaviors that could be impacted.

There was a direct correlation between higher student success and progress and the number of times students logged into their LMS per week, and/or the number of hours they were engaged in their coursework (Time on Task).

Question: Were staff using student-friendly language that students could respond to, such as login frequency and time on task, to give students direct, guided goals to reach?

The QA team recognized from the Pulse metrics dashboard that some staff had relatively high communication frequency statistics but low student engagement statistics: their attempts to connect were not producing positive student engagement. The team decided that it was time to study actual conversation practices by comparing staff who were effective at increasing student engagement with those who were falling short in this area. Pulse automatically records text-based student, parent, and staff conversations in Teacher Logs. Those logs were reviewed in a research study facilitated by the QA team. The following ranking and selection process was used.

- Gathered staff level dashboard data for all weeks in March 2020

- Sorted staff by student engagement stats: average progress, response rate, and time on task

- Selected the top 8 and bottom 4 performing staff members

- Consolidated communication logs for each selected member (8,000+ messages)

- QA Team read conversation logs and began interpreting the results

- Invited top selected staff members to review our findings and provide insight

- Compiled results
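The ranking and selection steps above can be sketched in a few lines of Python. This is only an illustration: the case study does not say how the three engagement stats were combined, so the `StaffStats` record, the assumption that each stat is already scaled to the range 0 to 1, and the unweighted average used as a score are all hypothetical choices, not Pulse's actual method.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class StaffStats:
    """Hypothetical per-staff engagement record; each stat is assumed scaled to 0..1."""
    name: str
    avg_progress: float
    response_rate: float
    time_on_task: float

    @property
    def score(self) -> float:
        # Assumption: an unweighted mean of the three stats named in the study.
        return mean([self.avg_progress, self.response_rate, self.time_on_task])


def select_top_and_bottom(staff, top_n=8, bottom_n=4):
    """Rank staff by score (highest first) and return (top group, bottom group)."""
    ranked = sorted(staff, key=lambda s: s.score, reverse=True)
    if top_n + bottom_n > len(ranked):
        raise ValueError("not enough staff to form non-overlapping groups")
    return ranked[:top_n], ranked[len(ranked) - bottom_n:]
```

The defaults of 8 and 4 mirror the group sizes reported in the study. The overlap check matters for small schools, where the same person could otherwise land in both groups.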

| Tier Level | Log In Rate | Time on Task |
| --- | --- | --- |
| Tier 1 | 4+ times per week | 14+ hours per week |
| Tier 2 | 3 times per week | 12 hours per week |
| Tier 3 | 1-2 times per week | 4-12 hours per week (avg. 8) |
| Tier 4 (totally un-engaged) | 0 times per week | 0 hours per week |
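To make the tier thresholds concrete, here is a minimal Python sketch that maps a student's weekly numbers to a tier. The source table leaves some cases open, so the code makes labeled assumptions: time on task between 12 and 14 hours is treated as Tier 2, anything above 0 and below 12 hours as Tier 3, and when the two measures disagree the student is placed in the less engaged tier. The function names are hypothetical and are not part of Pulse.

```python
def login_tier(logins_per_week: int) -> int:
    """Tier implied by weekly LMS logins, following the table's Log In Rate column."""
    if logins_per_week >= 4:
        return 1
    if logins_per_week == 3:
        return 2
    if logins_per_week >= 1:
        return 3
    return 4


def time_tier(hours_per_week: float) -> int:
    """Tier implied by weekly time on task.

    Assumptions: 12 up to 14 hours counts as Tier 2, and any non-zero
    time below 12 hours counts as Tier 3 (the table lists 4-12 hours).
    """
    if hours_per_week >= 14:
        return 1
    if hours_per_week >= 12:
        return 2
    if hours_per_week > 0:
        return 3
    return 4


def engagement_tier(logins_per_week: int, hours_per_week: float) -> int:
    """Assumption: when the two measures disagree, use the less engaged tier."""
    return max(login_tier(logins_per_week), time_tier(hours_per_week))
```

Taking the less engaged of the two tiers is a conservative choice: a student who logs in often but spends little time on coursework is still flagged for follow-up.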

Findings

There are nine (9) indicators of success that fall within three larger categories around communication. All 9 indicators can be influenced and impacted both positively and negatively by the adult performing them. The study also concluded that staff do not by default know how to communicate effectively with the written word, and this should not be assumed. Most educators were trained for an in-person environment and for teaching to a “captive audience,” which is different from interacting via written messages and feedback.

The following are the nine indicators that were found in the most effective messaging and were missing from the less effective messaging practices in the 8,000+ logs reviewed. “What’s working” to communicate effectively with students became very clear:

Message Prep: Preparing what to say

- Positive

- Relational

- Intentional

- Reflective

Message Practice: Fair and Consistent

- Reliable

- Consistent

- Balanced

Message Outcome: Effectiveness

- Connective

- Effective

A rubric was created to show what communication really looks like in each of these areas, rated as ineffective, minimally effective, effective, or highly effective.
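As a rough illustration of how such a rubric could be used for self-evaluation, the Python sketch below encodes the nine indicators and four rating levels named above and lists the indicators a staff member rated below "effective." The rubric's actual descriptors are not reproduced in this case study, so the `growth_areas` function and its cutoff are hypothetical.

```python
from enum import IntEnum


class Rating(IntEnum):
    INEFFECTIVE = 1
    MINIMALLY_EFFECTIVE = 2
    EFFECTIVE = 3
    HIGHLY_EFFECTIVE = 4


# The three categories and nine indicators listed in the findings.
INDICATORS = {
    "Message Prep": ["Positive", "Relational", "Intentional", "Reflective"],
    "Message Practice": ["Reliable", "Consistent", "Balanced"],
    "Message Outcome": ["Connective", "Effective"],
}


def growth_areas(self_eval: dict[str, Rating]) -> dict[str, list[str]]:
    """Return, per category, the indicators rated below EFFECTIVE.

    Assumption: a self-evaluation rates each of the nine indicators once.
    """
    expected = {name for names in INDICATORS.values() for name in names}
    if set(self_eval) != expected:
        raise ValueError("self-evaluation must rate exactly the nine indicators")
    return {
        category: [n for n in names if self_eval[n] < Rating.EFFECTIVE]
        for category, names in INDICATORS.items()
    }
```

Grouping the output by category keeps the result aligned with the rubric's structure, so a staff member can see at a glance whether the gaps are in preparation, practice, or outcome.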

Conclusion

This direction improved communication so that relationships with remote students could be positively developed. The Berrien Springs team found that students would become engaged intrinsically: once engaged, they would be motivated to complete coursework and acquire credits so they could obtain their diploma and graduate. This rubric can be used…

- as a self-evaluation for individual improvement,

- to determine professional development needs, and

- to set school improvement goals.

Communication drives relationships. Relationships drive connection. Connection drives engagement. Engagement drives achievement. The Pulse tool from Student Achievement Systems makes communication, connection and engagement easier to monitor, maintain, and optimize.

Before March 2020 and the onset of the COVID-19 pandemic, educational institutions were already expanding their offerings to include more online learning options. Now, even as the pandemic conditions gradually subside in 2021, online learning, virtual classrooms, and remote students will be a significant, permanent, and growing segment of the educational landscape.

For more information about Pulse, please complete the form below to request a conversation and product demo. Or call Student Achievement Systems directly at 616-209-8806, or e-mail info@accountabilitypulse.com.